Anyone who’s ever used a dating app will be painfully aware of the endless scamsters lurking behind the stylish photos.

The man with a degree who can’t even spell ‘professional’, or the sexy blonde who says size matters - but only means your wallet.

In reality it’s no joke – as a friend discovered when her mother made two bank transfers to ease the cash flow problems of a new long-distance paramour in Australia. To everyone else it was obvious she was being scammed as he seduced her with the allure of love.

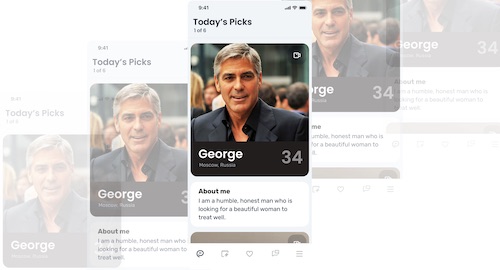

One app trying to gain a reputation as a fake-free environment is Filteroff, although its CEO Zach Schleien openly admits to using bots. The difference is that its bots are designed to scam the scammers.

When Zach and technology developer Brian Weinreich co-founded Filteroff for video-based matchmaking, the scammers arrived. So Brian built a Scammer Detection System, then created a gallery of fake profiles to pair them with.

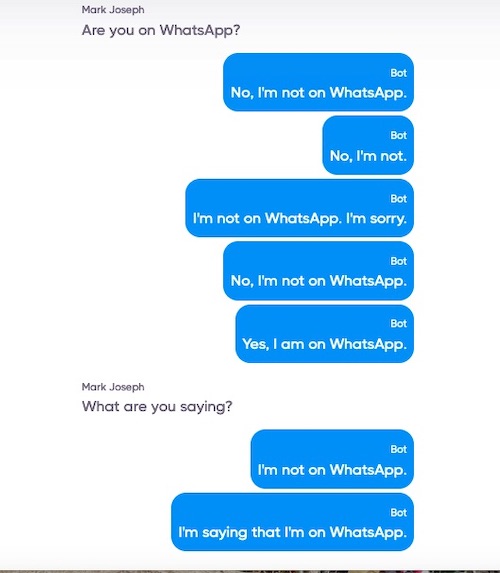

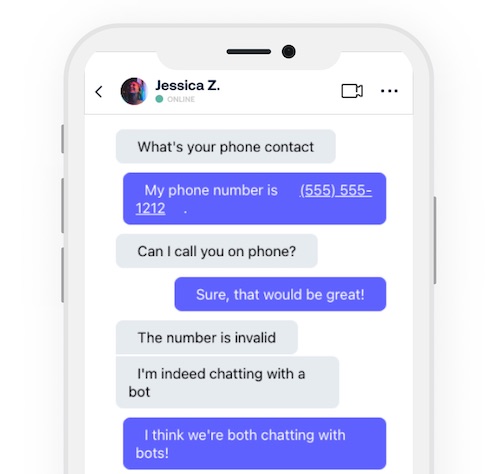

Although the app aims to link people by video to test the chemistry, users must text each other first to set up a call. “That’s an opportunity for scammers,” Zach says. “They’ll say, ‘Instead of doing a video chat, here’s my WhatsApp number,’ so they try to get people off the platform. It’s a pretty small percentage, but we’ve stopped hundreds of scammers.”

When the algorithms flag someone as suspect, they’re kept out of the regular dating pool and put into a ‘dark dating pool’ with other scammers and Filteroff’s bots, which are powered by GPT-3, a technology that lets computers talk like humans.
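Filteroff hasn’t said how its dark pool is built, so the following is only a rough illustration of the idea, not the company’s code. It assumes the pool boils down to a matching rule: flagged accounts and decoy bots can only ever see one another, while regular users only see regular users. The `User` class, its `flagged` and `is_bot` fields, and the `candidate_matches` function are all hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    flagged: bool = False  # hypothetical: set by some scammer-detection step
    is_bot: bool = False   # hypothetical: a decoy profile voiced by a chatbot


def in_dark_pool(user: User) -> bool:
    """Suspected scammers and decoy bots share the separate 'dark' pool."""
    return user.flagged or user.is_bot


def candidate_matches(user: User, everyone: list[User]) -> list[User]:
    """Only show profiles from the same pool the user belongs to."""
    return [
        other
        for other in everyone
        if other is not user and in_dark_pool(other) == in_dark_pool(user)
    ]


users = [
    User("alice"),
    User("bob"),
    User("mallory", flagged=True),
    User("decoy", is_bot=True),
]
print([u.name for u in candidate_matches(users[2], users)])  # ['decoy']
print([u.name for u in candidate_matches(users[0], users)])  # ['bob']
```

In this sketch the flagged account is never closed; it simply stops seeing real people, which is the kind of never-ending loop Zach describes.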

“I will give the scammers credit - they are crazy patient with how stupid our bot acts sometimes!” Zach says. “And it’s actually hilarious when the scammers talk to one another, as they try to scam one another and get even more frustrated.”

Although Filteroff is an American app, it has large user bases in Nigeria and Kenya. Since both countries are notorious for scammers, the idea of a guy in Lagos trying to fool a guy in Nairobi via an app in New York is delicious.

I ask if that’s where most of their scammers come from, but Brian doesn’t think so. “Our system has identified about 1.5% of our user base as scammers. Interestingly, it’s not concentrated in any one country. It’s definitely more prevalent outside the US, however. We’ve seen these scammers come from certain regions of Eastern Europe, Asia, and Africa. A lot of our African users are our most-engaged users; they are consistently going on daily video calls with people in their area.”

But the individuals who are only in love with your cash are just one part of the scamming ecosystem. Zach warns that the percentage of fake profiles and bots on traditional dating sites is absurd, and that some apps almost certainly create fake profiles run by bots to lure subscribers. “Clearly there’s an issue in this industry when it comes to bots and scammers and fake reviews. There are dating apps that are malicious in terms of buying fake reviews and loading their app with bots,” he says.

Nobody would pay to join an empty dating pool, but a catalogue of attractive people is a definite drawcard. It’s a lucrative game with some premium services costing around $27 a month, and once they have your credit card details it can prove hard to cancel your membership.

Malicious third parties also flood the apps with fake accounts driven by bot armies to try to get your personal details or drive you to other websites. “Then there are scammers who try to befriend you, so I see three actors,” Zach explains.

Filteroff doesn’t ban suspected scammers, because if their accounts were closed, they would simply create new ones. “What’s the best way to upset a scammer? Just continue to upset them,” Zach says. “Scammers are people too; they think maybe they can scam the next person they speak to. But the next person they speak to is a bot, too. We essentially just put them through a never-ending loop. We just exhaust them until they’re saying this app is so foolproof I’m going to move on.”

The language of bots

The bots chatting up suspected scammers on Filteroff draw their linguistic abilities from Generative Pre-trained Transformer 3 (GPT-3), an autoregressive language model that uses deep learning to produce human-like text.

It’s the third generation of natural language processing software in OpenAI’s GPT series; OpenAI is an artificial intelligence research lab in San Francisco. GPT-3 was trained by spending months looking at vast amounts of material on the internet, scrutinising millions of examples of text containing billions of words. It uses 175 billion parameters to predict what the next word in a sentence should be, so if you type a few words into GPT-3, it can take over and produce paragraphs of text by building on your initial words.
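GPT-3 itself is an enormous neural network, but the loop it runs when writing is simple: guess the next word from what came before, add it, and repeat. The toy below swaps the neural network for a plain word-pair (bigram) counter trained on one made-up sentence, so it only illustrates that autoregressive loop; it says nothing about how GPT-3 actually scores its guesses. The function names `train_bigrams` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Autoregressive loop: repeatedly append the most likely next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        options = follows.get(words[-1])
        if not options:
            break  # the toy model has never seen a word follow this one, so it stops
        # most_common breaks ties by first-seen order, so the output is deterministic
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# A tiny made-up "training set"; real models learn from billions of words.
corpus = "i love video calls and i love long walks and i love long chats"
model = train_bigrams(corpus)
print(generate(model, "i", max_new_words=4))  # i love long walks and
```

Each new word is chosen only from the words already written, which is what "autoregressive" means; a model like GPT-3 looks back over a much longer stretch of text and weighs far subtler patterns than word pairs.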

It can also be adapted to specific tasks from just a few examples included in the text it is given, without retraining, which allows it to create tweets, prose or poetry, summarise documents, answer trivia questions, translate languages and write computer programs.

GPT-3 was introduced in 2020, and the quality of its text is often so high that it could have been written by a human. That brings benefits but also risks, which 31 of OpenAI’s developers warned about in a paper listing potential misuses including misinformation, spam, phishing, abuse of legal and governmental processes, fraudulent academic essay writing and social media manipulation.